Late last month, a cybersecurity research firm alleged that security vulnerabilities in a connected cardiac medical device – a “smart” pacemaker and monitor combo – made by St. Jude Medical might put patients’ lives at risk.

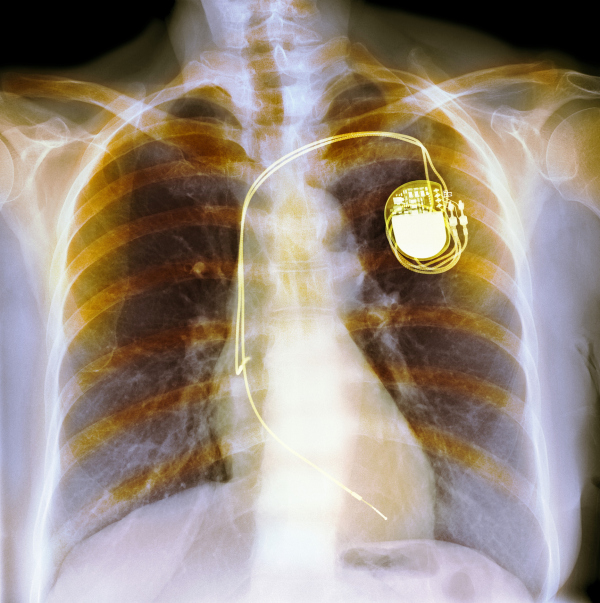

Once standalone, many pacemakers now include wireless remote monitoring that enables physicians to optimize treatment and rapidly detect hardware problems. The pacemaker uses short-wave radio to communicate with a gateway or mobile device, which relays that data to caregivers over Wi-Fi or cellular connections.

The alleged vulnerabilities would let an attacker crash the implantable pacemaker or drain its battery. Either could be fatal to patients whose hearts can’t beat correctly without a functional pacemaker. (I learned this fact from my father, who has a pacemaker from a different manufacturer.)

It’s worth noting here that the cybersecurity researchers sold their findings to a hedge fund, which shorted St. Jude’s stock, and that the security research firm is being compensated based on the fund’s performance. St. Jude and other researchers are also disputing the claims.

While much will be debated about the veracity, legality, and ethics of the researchers’ and hedge fund’s activities, my most important takeaway is that this episode reinforces the urgent need for open security assessment programs for connected medical devices, which we increasingly depend upon for our health and privacy.

What Regulators are Doing

Government regulators haven’t been standing idly by as more and more connected devices, including diabetes insulin pumps, hospital infusion pumps, and wireless cardiac devices, get into the market and into our bodies.

The U.S. FDA has provided pre- and post-market cybersecurity guidance and played an active role in community discussions, conferences, and other activities that help us work together to address cybersecurity challenges. In addition, the U.S. National Institute of Standards and Technology (NIST) has created a cybersecurity framework for critical infrastructure developers, including medical/healthcare device makers.

This is all well and good, but neither the FDA nor NIST currently implements or mandates an actual security assessment program for connected medical devices. NIST says it has “no plans to develop a conformity assessment program.” Rather, “NIST encourages the private sector to determine its conformity needs, and then develop appropriate conformity assessment programs.”

What Should an Assessment Program Look Like?

It should be:

- Open and inclusive (international, all stakeholders)

- Risk-based in defining objectives and functional requirements

- Scientific in its approach to evaluation

- Efficient (cost and time)

- Adaptive (continuous improvement, assurance maintenance program)

The benefits for medical device makers are numerous, including:

- Obtain assistance in determining an appropriate set of security controls that meets the needs of all stakeholders;

- Ensure security efforts are assessed and confirmed by independent cybersecurity experts;

- Provide patients, caregivers, and other stakeholders confidence in security by documenting which products have been successfully assessed, and all of the details around how;

- Reduce legal, financial, and brand damage risk by demonstrating that their products have gone through the commercial best practice of an open, standardized, independent security assessment process.

And of course there are benefits to other stakeholders, including:

- Let insurers more accurately assess cybersecurity risk and offer optimized insurance plans based on assessment results, reducing financial risk for insurers, manufacturers, hospitals, and patients;

- Enable caregivers and patients to choose devices wisely and reduce cybersecurity risk.

A Path Forward for Medical Device Makers

Product security assessment organizations, standards, and programs exist in a variety of industries outside of healthcare, including payment smart card microchips and firmware (e.g. EMVCo) and national security information systems (e.g. FedRAMP, NIAP, and FIPS 140-2).

Until recently, there were no security assessment programs for the medical device industry. In May, this changed when the non-profit Diabetes Technology Society released the DTSec security standard and assessment program to evaluate the security of insulin pump controllers and other network-connected medical devices.

DTSec is an open, independent security assessment based on commercial best practices. It was developed through a collaboration of medical device makers, medical researchers in academia, government regulatory agencies, patient representatives, cybersecurity experts, attorneys, and others. BlackBerry’s Center for High Assurance Computing Excellence (CHACE) is a lead author of the standard, as well as a member of the assessment program committee.

DTSec serves as a model for any industry to offer the security confidence we need in connected systems. (If you’re interested in talking about expanding this model to other types of medical devices or other kinds of products, please email me at highassurance@blackberry.com.)

Last year it was hospital infusion pumps. Last week we had cardiac implantables. One thing we can be sure of is that soon there will be another disturbing report of vulnerable life-critical medical devices that further erodes consumer confidence. The only way we will gain back this confidence and move past the “he said, she said” nature of vulnerability reports today, as we’re seeing play out in the St. Jude Medical incident, is to put in place open, independent security assessment programs. By using the DTSec model, we can ensure caregivers and patients will not incur undue risk from lack of awareness about which products are secure and which are not known to be secure.