What if you were put on trial for a crime you didn’t commit, but the video presented in court clearly showed you committing it? That scenario is a potential future development in deepfakes worth watching, especially because today the most common application of deepfakes is to convincingly replace one person’s digital likeness with another’s. Usually this is done for entertainment or satire, but like most technologies, tools that can be used for good can also be used for evil.

In our new BlackBerry white paper, Deepfakes Unmasked, we take a closer look at deepfake technology: its evolution, risks, mitigations, and governance issues, along with the many challenges it poses to society and how we, as defenders, may combat them in the future.

In this blog, we’ll walk you through some of the key topics we cover. Watch the video below with Ismael Valenzuela, BlackBerry Vice President of Threat Research and Intelligence, for his perspective on deepfake challenges and why he believes this new report is so valuable.

How Do We Define a Deepfake?

Deepfakes are highly realistic and convincing synthetic digital media created by generative AI, typically in the form of video, still images, or audio. While deepfakes are often used for creative or artistic purposes, they are also deliberately misused by those with malicious intent to deceive through cybercrimes, online scams, political disinformation, and more.

How are Deepfakes Created?

Artificial intelligence itself is not new. In fact, BlackBerry® cybersecurity platforms are based on our long-standing and patented Cylance® AI. Technologies like machine learning (ML), neural networks, computer vision, and so on, have been around for years. However, a new “flavor” of AI, called generative AI, burst into public view and availability in late 2022. This type of artificial intelligence rapidly generates new, original content in response to a user’s requests or questions.

Generative AI applications can generate text, images, computer code, and many other types of synthetic data. They even learn in a way loosely similar to how people do: through iterative practice, known as training.

During training, the AI system, known as a model, is fed vast quantities of data relating to the desired outcome. The goal of training is to produce an ML model that can respond usefully to a wide range of requests. The longer an AI model has existed and been trained, the more “mature” it is said to be.
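To make the idea of iterative training concrete, here is a toy sketch in Python. It is a deliberate simplification and an assumption on our part, not how any particular generative model is built: a single-parameter model learns the hidden rule y = 3x from examples by gradient descent, nudging its parameter a little on each pass (epoch) to reduce its error. The function name `train` and the learning-rate and epoch values are hypothetical choices for illustration.

```python
# Toy illustration of "training": repeatedly adjust a model parameter to
# reduce its error on example data. Real generative models have billions of
# parameters and far larger datasets; this is only a minimal sketch.


def train(data: list[tuple[float, float]], epochs: int = 200, lr: float = 0.01) -> float:
    """Fit y ~ w * x by gradient descent on mean squared error and return w."""
    w = 0.0  # the model starts out knowing nothing about the rule
    n = len(data)
    for _ in range(epochs):
        # Gradient of mean((w*x - y)^2) with respect to w
        grad = sum(2 * (w * x - y) * x for x, y in data) / n
        w -= lr * grad  # take a small step that reduces the error
    return w


if __name__ == "__main__":
    # Training examples generated from the hidden rule y = 3x
    examples = [(x, 3.0 * x) for x in range(1, 6)]
    for epochs in (1, 10, 200):
        print(epochs, round(train(examples, epochs=epochs), 3))
```

After one pass the parameter is still far off (0.66), after 10 passes it is about 2.75, and after 200 it is essentially 3.0. This mirrors, in miniature, the idea that more training tends to yield a more “mature” model.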

Many generative AI applications are built on foundation models, including large language models:

- Foundation models are large machine learning models that are pre-trained on broad data and then fine-tuned for more specific tasks, such as language understanding and generation.

- Large language models (LLMs) are deep learning models trained on enormous datasets (in some cases on billions of pages of text). They can receive a text input from a human user and produce a text output that mimics everyday natural human language.

One of the most surprising aspects of generative AI models is their versatility. When fed enough high-quality training data, mature models can produce output that approximates various aspects of human creativity. They can write poems or plays, discuss legal cases, brainstorm ideas on a given theme, write computer code or music, pen a graduate thesis, produce lesson plans for a high-school class, and so on. The only current limit to their use is (ironically) human imagination.

The Uses of Deepfakes

The broader market for AI-based software and associated systems is growing as rapidly as public interest in them. According to a recent cybersecurity fact sheet jointly released by the FBI, the National Security Agency (NSA), and the Cybersecurity and Infrastructure Security Agency (CISA), the generative AI market is expected to exceed $100 billion by 2030, growing at a rate of more than 35% per year.

Major Hollywood studios are already using visual effects powered by AI in a myriad of creative ways, such as recreating famous historical figures or de-aging an older actor. For instance, the last Indiana Jones movie used proprietary “FaceSwap” technology to make the 82-year-old actor Harrison Ford look young again in a 25-minute flashback scene.

Everyday consumers can create both still and moving deepfakes using a large number of generative AI software tools, each with different capabilities. As one example, Deep Nostalgia is an app created by MyHeritage that uses computer vision and deep learning to animate photos of your ancestors or historical figures.

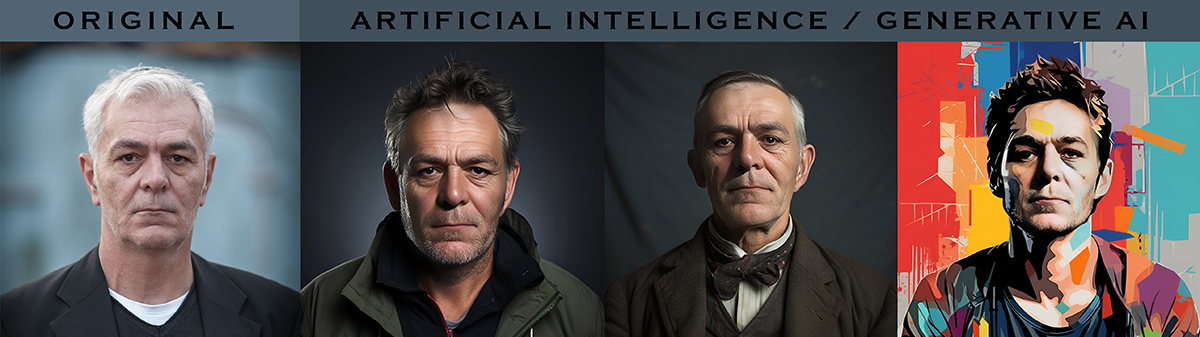

As these models improve, so does their output. Fake images generated by such tools are gradually becoming more life-like and believable, with their ease of access and low barrier to entry.

Figure 1: Deepfakes for artistic or creative purposes can be generated using one of a plethora of AI image generators.

There are also programs that let you perform what’s known as a “video-to-video” swap, where the software records your voice and facial expressions and replaces them with the voice and face of a different person.

In addition, audio-based deepfake creation apps, known as deep-voice software, can generate not just speech but also the emotional nuance, tone, and pitch needed to closely mimic a target voice.

Over the years, many political figures and celebrities have had their voices cloned to fabricate false statements or promote misinformation without their knowledge or permission. Though some have been generated for satire and parody, the potential for the abuse of such manipulations is profound and cannot be ignored.

Deepfake Scams

While social media platforms such as Facebook and X have taken steps to remove and even prohibit deepfake content, enforcement and its effectiveness vary because deepfake detection systems are inherently difficult to put in place. If humans struggle to tell a deepfake video from a real one, automated systems often struggle too.

When sophisticated real-time video motion-capture is combined with deepfake audio, the results can be so convincing that even the most skeptical among us can be fooled.

For example, in February 2024, a finance worker at a multinational firm was tricked into initiating a $25 million payment to fraudsters, who used deepfake technology to pretend to be the company’s chief financial officer. According to Hong Kong police, the worker attended a videoconference with what he believed were real staff members, but who were in fact all deepfakes.

This is just the latest in a rash of recent cases where fraudsters have used the latest technology to bolster one of the oldest tricks in the book: the art of the scam.

According to the Identity Theft Resource Center (ITRC), nearly 234 million individuals were impacted by some sort of data compromise in the U.S. in the first three quarters of 2023. The Federal Trade Commission’s (FTC) Consumer Sentinel Network took in over 5.39 million reports in 2023, of which 48 percent were for fraud and 19 percent for identity theft.
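For a sense of scale, the FTC percentages can be converted into approximate report counts. Because the published percentages are rounded, these are approximations rather than official totals:

$$
\begin{aligned}
\text{fraud reports} &\approx 0.48 \times 5.39\ \text{million} \approx 2.59\ \text{million} \\
\text{identity theft reports} &\approx 0.19 \times 5.39\ \text{million} \approx 1.02\ \text{million}
\end{aligned}
$$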

In previous decades, Internet fraudsters cast the widest possible nets to dupe the masses, as in the case of malspam (spam with malware), but as digital trends have evolved, so too have the tactics and techniques of online scammers.

Deepfakes may prove a game-changer, as they allow fraudsters to laser-focus on a specific individual at a fraction of the previous cost.

Deepfake Attack Examples

As one of the world’s most instantly recognized faces, Elon Musk is regularly exploited by scammers. The CEO of Tesla and SpaceX is well known for his interest in cryptocurrency, and scammers have exploited that association to create believable deepfakes promoting fraudulent cryptocurrency schemes. These deepfakes steer viewers to phishing sites that con victims into clicking links or creating accounts, whereupon the fraudster steals their financial credentials and any money in their crypto-wallets.

Scam calls are an ever-present menace. According to the latest statistics in Truecaller’s 2024 U.S. Spam & Scam Report, in the 12 months between April 2023 and March 2024, Americans wasted an estimated 219 million hours due to scam and robo-calls, and lost a total of $25.4 billion USD, with the loss per victim averaging $452. The company noted, “Artificial Intelligence is increasingly being used to make these scam calls and script scam texts, making them sound more realistic and effective.”
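Dividing the total losses by the average loss per victim gives a rough, back-of-the-envelope sense of how many people lost money, assuming (on our part) that the $452 figure is a simple average across all victims:

$$
\text{victims} \approx \frac{\$25.4 \times 10^{9}}{\$452\ \text{per victim}} \approx 5.6 \times 10^{7}\ (\text{about 56 million})
$$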

This newly expanded cyberattack surface is a very real threat to companies of all sizes. An example would be an attacker cloning the voice of a CEO by using samples of their voice found on the Internet.

This played out in a very real way in July 2024, when an executive at Ferrari received a series of scam messages via the popular messaging platform WhatsApp, purporting to be from the CEO, Benedetto Vigna. The messages spoke of an “upcoming acquisition” by Ferrari and asked the executive to carry out a top-secret currency-hedge transaction.

The executive was suspicious as the number was different from Vigna’s usual business number. He then received a live call from the scammers using a “deep-voice” clone of the Italian CEO’s voice. The executive noticed additional red flags on the call, such as audio artifacts (similar to those you might hear on a low-quality MP3). To verify the caller’s identity, he asked a question about a book the real CEO recently recommended to him. The scammers couldn’t answer the question and hung up, foiled by the executive’s quick thinking.
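The executive’s checks can be expressed as a simple decision rule that finance teams might adapt. The sketch below is hypothetical: the `PaymentRequest` fields, the flag wording, and the rule that any red flag triggers a hold (and a failed personal question triggers rejection) are illustrative assumptions, not a BlackBerry or Ferrari procedure.

```python
from dataclasses import dataclass
from typing import Optional, Set

# Hypothetical model of an incoming payment request. Field names and the
# decision rule below are illustrative assumptions, not an official procedure.


@dataclass
class PaymentRequest:
    claimed_sender: str
    contact_number: str
    known_numbers: Set[str]         # numbers on file for the claimed sender
    urgent_or_secret: bool          # e.g., a "top-secret" or rushed request
    audio_artifacts_noticed: bool   # e.g., a voice that sounds compressed or robotic
    challenge_answered: Optional[bool] = None  # None: no personal question asked yet


def red_flags(req: PaymentRequest) -> list:
    """Return the red flags observed for this request."""
    flags = []
    if req.contact_number not in req.known_numbers:
        flags.append("unfamiliar contact number")
    if req.urgent_or_secret:
        flags.append("pressure for urgency or secrecy")
    if req.audio_artifacts_noticed:
        flags.append("audio artifacts on the call")
    if req.challenge_answered is False:
        flags.append("failed a personal verification question")
    return flags


def decide(req: PaymentRequest) -> str:
    """Map red flags to an action: proceed, hold for verification, or reject."""
    flags = red_flags(req)
    if not flags:
        return "proceed under normal approval controls"
    if req.challenge_answered is False:
        return "reject and report: " + ", ".join(flags)
    return "hold: verify through a known channel (" + ", ".join(flags) + ")"


if __name__ == "__main__":
    # A request resembling the Ferrari case: new number, secrecy, odd audio.
    request = PaymentRequest(
        claimed_sender="CEO",
        contact_number="555-0199",
        known_numbers={"555-0100"},
        urgent_or_secret=True,
        audio_artifacts_noticed=True,
    )
    print(decide(request))  # prints a "hold" decision listing three flags
    request.challenge_answered = False  # caller could not answer the question
    print(decide(request))  # prints a "reject and report" decision
```

The key design choice is that no single signal has to be conclusive: a mismatched number alone is enough to pause and verify through a known channel, exactly as the executive did.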

Other companies aren’t so lucky. In a case that came to light in 2021, a branch manager at a Japanese company in Hong Kong received a seemingly legitimate demand, in what he believed was the voice of a director of the parent company, to authorize financial transfers totaling nearly $35 million. Recognizing the director’s voice and accustomed to such urgent requests, the branch manager released the funds.

As deep-voice and generative AI voice alteration software improves, it’s likely these tools will be used more frequently in a growing number of targeted attacks.

Ethical Concerns

The use of deepfake software raises both legal and ethical concerns around topics such as freedom of use and expression, the right to privacy, and, of course, copyright. In June 2023, the FBI posted a new warning that cybercriminals were using publicly available photos and videos to conduct deepfake extortion schemes against private citizens.

In the current era of mass propaganda, deliberate misinformation through the doctoring of audio-visual media also benefits those with geopolitical goals and can perpetuate falsehoods spread by nation-states.

As the Russian invasion of Ukraine grinds on through its third year, both sides are wielding new forms of wartime propaganda. In March 2022, a deepfake video of President Volodymyr Zelenskyy appeared to show the Ukrainian leader calling on his soldiers to lay down their arms and surrender. The falsified video was uploaded to compromised Ukrainian news outlets and circulated on pro-Russian social media and other digital platforms.

Hany Farid, a professor at the University of California, Berkeley, and an expert in digital media forensics, said of the video, “It pollutes the information ecosystem, and it casts a shadow on all content, which is already dealing with the complex fog of war.”

The disruption and fear that a single piece of multimedia can potentially cause makes deepfake technology a valuable addition to the arsenal of warring nations. As more of the world adopts messaging platforms like Telegram and WhatsApp, disinformation spreads like wildfire and it becomes ever more difficult to separate fact from fiction.

Detecting Deepfakes

In May 2024, researchers reported that GPT-4 passed a version of the Turing test, marking a significant milestone in AI’s mastery of natural language. In the study, GPT-4 convinced 54 percent of the people who interacted with it that it was human. The Turing test, proposed by Alan Turing in 1950, involves a human “judge” conversing by text with both a human and a machine. If the judge can’t reliably tell the two apart, the machine is said to have passed the test.

Figure 2: In May 2024, GPT-4 passed a version of the Turing test, marking a significant milestone in AI’s mastery of natural language.

So how can individuals and organizations guard against deepfakes? As with most modern cyber-threats, there is no single technological solution to detect or thwart deepfakes. As we discuss in our new white paper, detection is a highly complex task that calls for a layered approach combining multiple techniques and methods. It remains an active area of research.

The Biden administration has recently proposed digital watermarking as a policy response to deepfakes, suggesting that tech companies be required to watermark AI-generated content. For example, in February 2024, OpenAI announced it would add watermarks, in the form of C2PA provenance metadata, to images generated by its text-to-image tool DALL·E 3, but cautioned that these could “easily be removed.”
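To see why a naive watermark can be stripped so easily, consider a toy invisible watermark that hides bits in the least-significant bit (LSB) of grayscale pixel values. This is not the mechanism OpenAI uses for DALL·E 3 images; it is a much simpler technique chosen for illustration, and the function names and the crude `requantize` step (a stand-in for lossy re-encoding) are our own hypothetical assumptions.

```python
# Toy LSB watermark on grayscale pixel values (0-255). Illustrative only:
# real watermarking schemes are designed to be far more robust than this.


def embed(pixels: list[int], bits: list[int]) -> list[int]:
    """Overwrite the least-significant bit of the first len(bits) pixels."""
    if len(bits) > len(pixels):
        raise ValueError("image too small for watermark")
    marked = pixels.copy()
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | bit  # clear the LSB, then set it to the bit
    return marked


def extract(pixels: list[int], n_bits: int) -> list[int]:
    """Read the watermark back from the least-significant bits."""
    return [p & 1 for p in pixels[:n_bits]]


def requantize(pixels: list[int], step: int = 4) -> list[int]:
    """Crude stand-in for lossy re-encoding: round each value down to a multiple of step."""
    return [p - p % step for p in pixels]


if __name__ == "__main__":
    image = [200, 13, 77, 154, 98, 255, 0, 31]
    mark = [1, 0, 1, 1, 0, 0, 1, 0]
    stamped = embed(image, mark)
    print(extract(stamped, len(mark)) == mark)              # watermark recovered
    print(extract(requantize(stamped), len(mark)) == mark)  # watermark destroyed
```

Embedding changes each pixel by at most one intensity level, which is invisible to the eye, yet a single lossy transformation wipes the mark out. Sturdier schemes raise the bar, but the same cat-and-mouse dynamic applies.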

In a similar vein, embedding cryptographic signatures in original content helps verify its legitimacy and origin. Blockchain-based verification is another growing method for authenticating digital creations, and such approaches could prove crucial to distinguishing real content from fabricated content.
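The two ideas in this paragraph, signing content at its source and recording it in a tamper-evident ledger, can be sketched with Python’s standard library. This is a simplified illustration under stated assumptions: HMAC-SHA256 with a shared secret stands in for a real public-key signature (provenance standards such as C2PA use certificate-based signatures), a plain hash chain stands in for a blockchain, and `SECRET_KEY`, `ProvenanceChain`, and the record fields are hypothetical.

```python
import hashlib
import hmac
import json

# Sketch only: HMAC with a shared secret stands in for a public-key signature,
# and a simple hash chain stands in for a blockchain ledger.
SECRET_KEY = b"publisher-demo-key"  # hypothetical; never hard-code real keys


def sign(content: bytes) -> str:
    """Return an HMAC-SHA256 tag binding the content to the key holder."""
    return hmac.new(SECRET_KEY, content, hashlib.sha256).hexdigest()


def verify(content: bytes, tag: str) -> bool:
    """Check a tag in constant time."""
    return hmac.compare_digest(sign(content), tag)


def _entry_hash(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class ProvenanceChain:
    """Append-only log in which each entry commits to the previous entry's hash."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, content: bytes) -> dict:
        prev = self.entries[-1]["entry_hash"] if self.entries else "0" * 64
        body = {
            "content_sha256": hashlib.sha256(content).hexdigest(),
            "signature": sign(content),
            "prev_hash": prev,
        }
        body["entry_hash"] = _entry_hash(body)
        self.entries.append(body)
        return body

    def chain_intact(self) -> bool:
        """Recompute every link; any edited entry breaks the chain."""
        prev = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if entry["prev_hash"] != prev or entry["entry_hash"] != _entry_hash(body):
                return False
            prev = entry["entry_hash"]
        return True

    def is_registered(self, content: bytes) -> bool:
        """True only if this exact content was signed and recorded."""
        digest = hashlib.sha256(content).hexdigest()
        return any(
            e["content_sha256"] == digest and verify(content, e["signature"])
            for e in self.entries
        )
```

Any edit to a registered file changes its SHA-256 digest, so a doctored copy no longer matches the ledger, and altering an earlier ledger entry breaks every link after it. Note the limit: this shows that a file matches what the publisher registered, not that the registered content was truthful in the first place.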

One of the strongest mitigation techniques is user awareness and education. Companies should implement a robust training program to educate employees about the threat of deepfakes: how cybercriminals can leverage them, how to recognize them, what to do when something seems suspicious, and the risks if a threat actor targets the organization with deepfakes. This user education can go a long way in reducing the deepfake attack surface.

Training resources for spotting deepfakes are already available from the following sources:

Employees who work in sales, finance and HR should be particularly alert for fraudsters impersonating customers to access confidential client accounts and financial information.

Reporting Deepfake Attacks

Companies should report malicious deepfake attacks to the appropriate U.S. government agency, such as the NSA Cybersecurity Collaboration Center (for Department of Defense-related organizations) or the FBI (including local field offices or CyWatch@fbi.gov). Advanced forensic recommendations for detecting deepfakes can be found in CISA’s recent joint advisory on deepfake awareness and mitigation. CISA collects and shares cybersecurity information across U.S. government agencies, and incidents can be reported to it at https://www.cisa.gov/report.

The Future of Deepfakes

In the meantime, generative AI continues to advance at a staggering rate, and the sophistication of deepfake media of all types will advance with it. Cybercriminals are likely to leverage more advanced AI to create more convincing deepfakes for more intricate scams.

Says Shiladitya Sircar, Senior Vice President, Product Engineering and Data Science at BlackBerry, “Technology that can detect deepfakes is lagging far behind the pace that new AI software and systems are being produced. It is imperative that product designers, engineers, and leadership work together to define cryptographically secure authenticity validation standards for digital content.”

Until then, it is advisable to employ currently available strategies to mitigate the potential impact of deepfake scams on your organization.

Ready to learn more? Download our new Deepfakes white paper here.
